User:Trevithj/Negative feedback

Negative feedback is the term widely used to describe the concept of automatic correction or goal-seeking behavior in many natural and artificial systems. The term comes from control theory, where corrective actions are based on the difference between desired and actual performance, which is fed back to a process. It is called negative feedback because forming this difference involves, in some sense, subtracting the actual measure from the desired one. General negative feedback systems are studied in control systems engineering.[1][2]

Brief history

In mercury thermostats (circa 1600), negative feedback was used through the action of expanding columns of mercury to control vents in furnaces, maintaining a steady internal temperature.

In the "invisible hand" metaphor of market economics (1776), price movements provide a feedback mechanism to match supply and demand.

In centrifugal governors, negative feedback is used to maintain a near-constant speed of an engine, irrespective of the load or fuel-supply conditions.

In servomechanisms, feedback is used to make the speed or position of an output actuator track an input from a sensor or a user setting.

In audio amplifiers, negative feedback reduces distortion, minimises the effect of manufacturing variations in component parameters, and compensates for changes in characteristics due to temperature change.

In analog computing, feedback around operational amplifiers is used to generate mathematical functions such as addition, subtraction, integration, differentiation, logarithm, and antilog functions.

In a phase-locked loop, feedback is used to keep a generated alternating waveform at a constant phase relative to a reference signal. In many implementations the generated waveform is the output, but when the loop is used as a demodulator in an FM radio receiver, the error feedback voltage serves as the demodulated output signal. If there is a frequency divider between the generated waveform and the phase comparator, the device acts as a frequency multiplier.

In organisms, feedback enables various measures (e.g., body temperature or blood sugar level) to be maintained within precise desired ranges by homeostatic processes.

History

Negative feedback as a control technique may be seen in the refinements of the water clock introduced by Ktesibios of Alexandria in the 3rd century BCE. Self-regulating mechanisms have existed since antiquity, and were used to maintain a constant level in the reservoirs of water clocks as early as 200 BCE.[3]

Negative feedback was also implemented in the 17th century. Cornelis Drebbel built thermostatically controlled incubators and ovens in the early 1600s,[4] and centrifugal governors were used to regulate the distance and pressure between millstones in windmills.[5] James Watt introduced a form of governor in 1788 to control the speed of his steam engine, and in 1868 James Clerk Maxwell described "component motions" associated with these governors that lead to a decrease in a disturbance or in the amplitude of an oscillation.[6]

The term "feedback" was well established by the 1920s, in reference to a means of boosting the gain of an electronic amplifier.[7] Friis and Jensen described this action as "positive feedback" and made passing mention of a contrasting "negative feed-back action" in 1924.[8] Harold Stephen Black detailed the use of negative feedback in electronic amplifiers in 1934, where he defined negative feedback as a type of coupling that reduced the gain of the amplifier, in the process greatly increasing its stability and bandwidth.[9][10] Nyquist and Bode built on Black’s work to develop a theory of amplifier stability, but chose to define "negative" as applying to the polarity of the loop (rather than the effect on the gain), which gave rise to some confusion over basic definitions.[7]

Early researchers in the area of cybernetics subsequently generalized the idea of negative feedback to cover any goal-seeking or purposeful behavior.[11]

All purposeful behavior may be considered to require negative feed-back. If a goal is to be attained, some signals from the goal are necessary at some time to direct the behavior.

Cybernetics pioneer Norbert Wiener helped to formalize the concepts of feedback control, defining feedback in general as "the chain of the transmission and return of information",[12] and negative feedback as the case when:

The information fed back to the control center tends to oppose the departure of the controlled from the controlling quantity... (p. 97)

While the view of feedback as any "circularity of action" helped to keep the theory simple and consistent, Ashby pointed out that, while it may clash with definitions that require a "materially evident" connection, "the exact definition of feedback is nowhere important".[1] Ashby also noted the limitations of the concept of "feedback":

The concept of 'feedback', so simple and natural in certain elementary cases, becomes artificial and of little use when the interconnections between the parts become more complex... Such complex systems cannot be treated as an interlaced set of more or less independent feedback circuits, but only as a whole. For understanding the general principles of dynamic systems, therefore, the concept of feedback is inadequate in itself. What is important is that complex systems, richly cross-connected internally, have complex behaviors, and that these behaviors can be goal-seeking in complex patterns. (p. 54)

Further confusion arose after BF Skinner introduced the terms positive and negative reinforcement,[13] both of which can be considered negative feedback mechanisms in the sense that they try to minimize deviations from the desired behavior.[14] In a similar context, Herold and Greller used the term "negative" to refer to the valence of the feedback: that is, cases where a subject receives an evaluation with an unpleasant emotional connotation.[15]

A common theme for the 10 items [in the feedback analysis] is their valence, all representing negative feedback. Examples are being removed from a job or suffering some adverse consequence due to poor performance or receiving more or less direct indications of dissatisfaction from co-workers or the supervisor.

To reduce confusion, later authors have suggested alternative terms such as degenerative,[16] self-correcting,[17] balancing,[18] or discrepancy-reducing[19] in place of "negative".

Overview

In many physical and biological systems, qualitatively different influences can oppose each other. For example, in biochemistry, one set of chemicals drives the system in a given direction, whereas another set of chemicals drives it in an opposing direction. If one or both of these opposing influences are non-linear, one or more equilibrium points result.

In biology, this process (in general, biochemical) is often referred to as homeostasis; whereas in mechanics, the more common term is equilibrium.

In engineering, mathematics, and the physical and biological sciences, common terms for the points around which the system gravitates include attractors, stable states, eigenstates/eigenfunctions, equilibrium points, and setpoints.

In control theory, negative refers to the sign of the multiplier in mathematical models for feedback. In delta notation, −Δoutput is added to or mixed into the input. In multivariate systems, vectors help to illustrate how several influences can both partially complement and partially oppose each other.[7]

Some authors, in particular with respect to modelling business systems, use negative to refer to the reduction in difference between the desired and actual behavior of a system.[14][20] In a psychology context, on the other hand, negative refers to the valence of the feedback – attractive versus aversive, or praise versus criticism.[15]

In contrast, positive feedback is feedback in which the system responds so as to increase the magnitude of any particular perturbation, resulting in amplification of the original signal instead of stabilization. Any system in which there is positive feedback together with a gain greater than one will result in a runaway situation. Both positive and negative feedback require a feedback loop to operate.
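The sign convention can be illustrated with a toy discrete-time loop. The sketch below is only an illustration under assumed values. The names `simulate`, `k` and `setpoint` are hypothetical. At each step, k times the current deviation from the set point is fed back with a sign of −1 (negative feedback) or +1 (positive feedback). Negative feedback therefore multiplies the deviation by 1 − k per step. Positive feedback multiplies it by 1 + k, which is greater than one and so runs away.

```python
def simulate(sign: int, k: float, x0: float, setpoint: float, steps: int) -> list[float]:
    """Trajectory of x in a toy loop where k * (x - setpoint) is fed back each step.

    sign=-1 models negative feedback (the returned signal opposes the deviation);
    sign=+1 models positive feedback (the returned signal reinforces it).
    """
    xs = [x0]
    for _ in range(steps):
        deviation = xs[-1] - setpoint
        xs.append(xs[-1] + sign * k * deviation)
    return xs


if __name__ == "__main__":
    # Hypothetical example: start 1 unit above a set point of 20, loop factor 0.5.
    negative = simulate(sign=-1, k=0.5, x0=21.0, setpoint=20.0, steps=10)
    positive = simulate(sign=+1, k=0.5, x0=21.0, setpoint=20.0, steps=10)
    # Negative feedback: the deviation is scaled by (1 - 0.5) per step, so it shrinks.
    print(f"negative feedback after 10 steps: {negative[-1]:.4f}")
    # Positive feedback: the deviation is scaled by (1 + 0.5) per step, so it grows.
    print(f"positive feedback after 10 steps: {positive[-1]:.4f}")
```

Suppose sign=-1 and k is greater than 2. The per-step factor 1 − k then has magnitude above one, so even negative feedback over-corrects. The deviation alternates in sign while growing, which is one simple way a negative feedback loop can become unstable.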

However, negative feedback systems can still be subject to oscillations, caused by the small delays around any loop. Because of these delays, the feedback signal at some frequencies can arrive half a cycle late, which has an effect similar to positive feedback. If the loop gain at those frequencies is large enough, they reinforce themselves and grow over time. This problem is often dealt with by attenuating the problematic frequencies or changing their phase. Unless the system naturally has sufficient damping, many negative feedback systems have low-pass filters or dampers fitted.
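The effect of loop delay can be seen in a toy discrete-time model. The sketch assumes a loop of the form x[n+1] = x[n] − k·x[n−d]. The correction opposes the deviation x but is computed from a measurement d steps old. The function names and parameter values are hypothetical. In this particular model, with no delay a gain of k = 0.5 settles. With a delay of three steps, a gain of 0.2 still settles, but a gain of 0.5 produces an oscillation whose amplitude grows over time.

```python
def simulate_delayed_feedback(k: float, delay: int, steps: int, x0: float = 1.0) -> list[float]:
    """Toy loop x[n+1] = x[n] - k * x[n - delay], returning x[0..steps].

    The correction always opposes the deviation x (negative feedback), but it
    is computed from a measurement that is `delay` steps old. The history
    before n = 0 is assumed to be constant at x0.
    """
    xs = [x0] * (delay + 1)              # x[-delay], ..., x[0]
    for _ in range(steps):
        delayed = xs[-1 - delay]         # the stale measurement x[n - delay]
        xs.append(xs[-1] - k * delayed)  # correction opposes the old deviation
    return xs[delay:]


def late_peak(xs: list[float], window: int = 50) -> float:
    """Largest |x| over the last `window` samples: near zero if the loop settled."""
    return max(abs(v) for v in xs[-window:])


if __name__ == "__main__":
    for k, delay in [(0.5, 0), (0.2, 3), (0.5, 3)]:
        xs = simulate_delayed_feedback(k, delay, steps=300)
        print(f"k={k}, delay={delay}: late peak |x| = {late_peak(xs):.3g}")
```

Lowering the gain (here from 0.5 to 0.2) restores stability in this model. This corresponds to the attenuation remedy mentioned above.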

Some specific implementations

There are many examples of negative feedback; some are discussed below.

Error-controlled regulation

One use of feedback is to make a system (say T) self-regulating to minimize the effect of a disturbance (say D). Using a negative feedback loop, a measurement of some variable (for example, a process variable, say E) is subtracted from a required value (the 'set point') to estimate an operational error in system status, which is then used by a regulator (say R) to reduce the gap between the measurement and the required value.[22][23] The regulator modifies the input to the system T according to its interpretation of the error in the status of the system. This error may be introduced by a variety of possible disturbances or 'upsets', some slow and some rapid.[24] The regulation in such systems can range from a simple 'on-off' control to a more complex processing of the error signal.[25]

It may be noted that the physical form of the signals in the system may change from point to point. So, for example, a disturbance (say, a change in weather) to the heat input to a house (as an example of the system T) is interpreted by a thermometer as a change in temperature (as an example of an 'essential variable' E), converted by the thermostat (a 'comparator') into an electrical error in status compared to the 'set point' S, and subsequently used by the regulator (containing a 'controller' that commands gas control valves and an ignitor) ultimately to change the heat provided by a furnace (an 'effector') to counter the initial weather-related disturbance in heat input to the house.
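The house example can be sketched as a small simulation. Everything in it is an assumption made for illustration:

- a one-number model of the house (the system T) that leaks heat to the outside in proportion to the temperature difference;
- a disturbance D in which the outside temperature drops from 10 to 0 degrees at step 50;
- a proportional regulator R that commands heat in proportion to the error between the set point S and the measured temperature E.

For comparison, the open-loop run supplies a fixed amount of heat tuned for the original weather. The function name and parameters are hypothetical.

```python
def run_house(steps: int, set_point: float, closed_loop: bool, kp: float = 1.0,
              leak: float = 0.05, max_heat: float = 5.0) -> list[float]:
    """Toy model of the house example; returns the indoor temperature per step.

    Each step the house loses leak * (temp - outside) to the outside and gains
    whatever heat the furnace supplies. The disturbance D is a drop in outside
    temperature from 10 to 0 at step 50.
    """
    temp = set_point
    history = []
    for n in range(steps):
        outside = 10.0 if n < 50 else 0.0               # disturbance D
        if closed_loop:
            error = set_point - temp                     # comparator: S minus measured E
            heat = min(max(kp * error, 0.0), max_heat)   # proportional regulator R
        else:
            heat = leak * (set_point - 10.0)             # fixed heat, tuned for the old weather
        temp += leak * (outside - temp) + heat           # the system T responds
        history.append(temp)
    return history


if __name__ == "__main__":
    closed = run_house(200, set_point=20.0, closed_loop=True)
    open_ = run_house(200, set_point=20.0, closed_loop=False)
    print(f"with regulator:    {closed[-1]:.2f}")
    print(f"without regulator: {open_[-1]:.2f}")
```

The regulated house ends near 19.05 degrees rather than exactly at the 20-degree set point, while the unregulated house drifts down to about 10 degrees. A purely proportional regulator leaves a small steady-state offset. More complex processing of the error signal (for example, adding an integral term) would remove it.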

Negative feedback amplifier

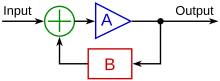

The figure shows a simplified block diagram of a negative feedback amplifier in which the feedback sets the overall ('closed-loop') amplifier gain at a value:

$$A_\text{FB} = \frac{A_\text{OL}}{1 + \beta A_\text{OL}} \approx \frac{1}{\beta},$$

where the approximate value assumes $\beta A_\text{OL} \gg 1$. The gain 1/β set by the feedback branch is independent of undesirable variations in the 'open-loop' gain $A_\text{OL}$ (for example, due to manufacturing variations between units, or temperature effects upon components), provided only that this gain is sufficiently large.

The difference signal $I - \beta O$ that is applied to the open-loop amplifier is often called the "error signal".[27] This difference is given by:

$$I - \beta O = \frac{I}{1 + \beta A_\text{OL}}.$$

According to this equation, the 'error' is determined by three factors: the input signal I, the feedback fraction β, and the open-loop gain $A_\text{OL}$. Even though the negative feedback amplifier does not attempt to correct variations in the open-loop gain by opposing such changes, the feedback circuit does reduce their impact on the overall closed-loop system behavior.[28]
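The sketch below is a numerical check of the standard single-loop relations. These are the closed-loop gain A_FB = A_OL/(1 + βA_OL) and the error signal I − βO = I/(1 + βA_OL). It uses a hypothetical feedback fraction β = 0.01, so 1/β = 100, and two open-loop gains that differ by a factor of two. Halving A_OL from 10^5 to 5×10^4 moves the closed-loop gain only from about 99.90 to about 99.80. The function names are illustrative.

```python
def closed_loop_gain(a_ol: float, beta: float) -> float:
    """Closed-loop gain A_OL / (1 + beta * A_OL) of the single-loop amplifier model."""
    return a_ol / (1.0 + beta * a_ol)


def error_signal(i: float, a_ol: float, beta: float) -> float:
    """Difference I - beta*O applied to the open-loop amplifier, with O = A_FB * I."""
    o = closed_loop_gain(a_ol, beta) * i
    return i - beta * o


if __name__ == "__main__":
    beta = 0.01                  # hypothetical feedback fraction: 1/beta = 100
    for a_ol in (1e5, 5e4):      # open-loop gain halves, e.g. between two units
        a_fb = closed_loop_gain(a_ol, beta)
        err = error_signal(1.0, a_ol, beta)
        print(f"A_OL={a_ol:.0e}  A_FB={a_fb:.4f}  error for I=1: {err:.3e}")
```

A 50% change in the open-loop gain thus produces roughly a 0.1% change in the closed-loop gain. This is the desensitizing effect described above.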

The negative feedback amplifier was invented by Harold Stephen Black at Bell Laboratories in 1927, and was granted a patent in 1937.[29]

There are many advantages to feedback in amplifiers.[30] For example, all electronic devices (among them vacuum tubes, bipolar transistors, and MOS transistors) exhibit some nonlinear behavior. Negative feedback corrects this by trading excess gain for higher linearity (lower distortion), substituting the smaller but constant gain 1/β for the large but nonlinear gain $A_\text{OL}$. An amplifier with too large an open-loop gain, possibly in a specific frequency range, will produce a large feedback signal in that same range. This feedback signal, when subtracted from the original input, reduces the input to the open-loop amplifier and thereby lowers the overall closed-loop gain. The reduced input, although amplified by the "too large" open-loop gain $A_\text{OL}$, results in an output that is amplified overall by only 1/β. The feedback network provides a value of β that does not depend on amplitude (that is, its output is directly proportional to its input). The net result is therefore a flattening (desensitizing) of the amplifier's gain over those frequencies where $\beta A_\text{OL} \gg 1$.

Though feedback renders the gain much more predictable, amplifiers with negative feedback can still oscillate (see step response) and may even become unstable. Harry Nyquist of Bell Laboratories proposed the Nyquist stability criterion and the Nyquist plot, which allow designers to check whether a feedback system will remain stable.
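The linearizing effect can be illustrated numerically. The sketch assumes a hypothetical amplifier with a soft-clipping open-loop characteristic, V_MAX·tanh(A0·v/V_MAX). It has a small-signal gain A0 = 10^4 and a ±10 V swing, and is wrapped in a feedback loop with β = 0.1. Gain compression is measured as the ratio of large-signal gain to small-signal gain, where 1.0 means perfectly linear. In this model, the open-loop amplifier driven to about 7.6 V of output loses about a quarter of its gain. The closed-loop amplifier at a similar output level loses only a few hundredths of a percent. All names and values are illustrative.

```python
import math

V_MAX = 10.0   # hypothetical output swing limit, volts
A0 = 1e4       # hypothetical small-signal open-loop gain


def open_loop(v: float) -> float:
    """Hypothetical amplifier: gain A0 for small inputs, soft clipping toward +/-V_MAX."""
    return V_MAX * math.tanh(A0 * v / V_MAX)


def closed_loop(i: float, beta: float, iterations: int = 200) -> float:
    """Solve O = open_loop(i - beta * O) for the output O by bisection.

    h(O) = open_loop(i - beta * O) - O decreases strictly as O increases,
    so it has one root, and that root lies within [-V_MAX, V_MAX].
    """
    lo, hi = -V_MAX, V_MAX
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if open_loop(i - beta * mid) - mid > 0:
            lo = mid       # output guess too low: the amplifier would produce more
        else:
            hi = mid
    return 0.5 * (lo + hi)


def gain_compression(amplifier, small: float, large: float) -> float:
    """Large-signal gain divided by small-signal gain (1.0 means perfectly linear)."""
    return (amplifier(large) / large) / (amplifier(small) / small)


if __name__ == "__main__":
    beta = 0.1     # feedback fraction: ideal closed-loop gain 1/beta = 10
    ol = gain_compression(open_loop, small=1e-6, large=1e-3)
    cl = gain_compression(lambda i: closed_loop(i, beta), small=1e-3, large=0.76)
    print(f"open-loop gain compression:   {ol:.4f}")
    print(f"closed-loop gain compression: {cl:.4f}")
```

The closed-loop output is found by bisection rather than by simply iterating O ← f(I − βO). With a loop gain this large, that iteration would overshoot and fail to converge.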

Operational amplifier circuits

Almost all operational amplifier circuits employ negative feedback. Since the open-loop gain of an op-amp is extremely large, the tiniest of input signals would drive the output of the amplifier to one rail or the other in the absence of negative feedback. A simple example of the use of feedback is the op-amp voltage amplifier shown in the figure.

The idealized model of an operational amplifier assumes that the gain is infinite, the input impedances are infinite, and input offset currents and voltages are zero. Such an ideal amplifier would draw no current from the resistor divider.[31] The infinite gain of the ideal op-amp means this feedback circuit would drive the voltage difference between the two op-amp inputs to zero.[31] Consequently, the voltage gain of this circuit is derived as:

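$$\frac{V_\text{out}}{V_\text{in}} = 1 + \frac{R_2}{R_1},$$

where, for the non-inverting configuration, $R_1$ is the divider resistor from the inverting input to ground and $R_2$ is the feedback resistor from the output to the inverting input.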

Practical op-amps are not ideal, but their input impedance is extremely high and they have very high gain. So the model of an ideal op-amp often suffices to understand circuit operation, putting aside assessment of non-ideal behaviors.

If the ideal op-amp is replaced by a realistic op-amp with finite gain and other imperfections, the external feedback circuit strongly suppresses the unwanted variations in amplifier properties, provided the op-amp gain is large. Those variations would appear if the op-amp were operated open-loop.[32]
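The ideal-versus-realistic comparison can be sketched for a non-inverting stage. This assumes the figure shows the usual non-inverting configuration. A hypothetical resistor r1 runs from the inverting input to ground and r2 from the output to the inverting input. The divider therefore feeds back β = r1/(r1 + r2) of the output, and the ideal gain is 1/β = 1 + r2/r1. The component values and open-loop gains are also illustrative. As the open-loop gain grows, the gain approaches the ideal value of 10, and the voltage left between the op-amp inputs shrinks toward zero.

```python
from typing import Optional


def noninverting_gain(r1: float, r2: float, a_ol: Optional[float] = None) -> float:
    """Voltage gain of an assumed non-inverting op-amp stage.

    r1: divider resistor from the inverting input to ground (hypothetical name)
    r2: feedback resistor from the output to the inverting input (hypothetical name)
    a_ol: open-loop gain; None stands for the ideal, infinite-gain op-amp
    """
    beta = r1 / (r1 + r2)          # fraction of the output fed back by the divider
    if a_ol is None:
        return 1.0 / beta          # ideal result, equal to 1 + r2/r1
    return a_ol / (1.0 + beta * a_ol)


if __name__ == "__main__":
    r1, r2, v_in = 1e3, 9e3, 0.1   # hypothetical component values and input
    ideal = noninverting_gain(r1, r2)
    print(f"ideal gain: {ideal:.4f}")
    for a_ol in (1e3, 1e5, 1e7):
        gain = noninverting_gain(r1, r2, a_ol)
        v_diff = gain * v_in / a_ol    # voltage left between the op-amp inputs
        print(f"A_OL={a_ol:.0e}: gain={gain:.4f} "
              f"({100 * (ideal - gain) / ideal:.3f}% low), input difference={v_diff:.2e} V")
```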

Mechanical engineering

In modern engineering, negative feedback loops are found in fuel injection systems and carburettors.

Control systems

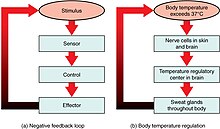

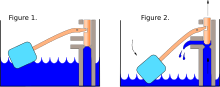

Examples of negative feedback used for control include thermostat control, the phase-locked loop oscillator, the ballcock control of water level (see diagram), and temperature regulation in animals.

A simple and practical example is a thermostat. When the temperature in a heated room reaches a certain upper limit, the room heating is switched off so that the temperature begins to fall. When the temperature drops to a lower limit, the heating is switched on again. Provided the limits are close to each other, a steady room temperature is maintained. Similar control mechanisms are used in cooling systems, such as an air conditioner, a refrigerator, or a freezer.
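The on-off behavior described above can be sketched as follows. The room model, its heat-loss coefficient, the heater power, and the 19 and 21 degree limits are all hypothetical choices for illustration. Between the limits the heating keeps its previous state, which is what makes the two limits act as a switching band.

```python
def thermostat(steps: int, lower: float, upper: float, start: float = 15.0,
               outside: float = 5.0, leak: float = 0.05,
               heater_power: float = 1.5) -> tuple[list[float], list[bool]]:
    """On-off room heating with switching limits `lower` and `upper`.

    Room model (hypothetical): each step the room loses leak * (temp - outside)
    and, while the heating is on, gains heater_power.
    """
    temp, heating = start, False
    temps, states = [], []
    for _ in range(steps):
        if temp >= upper:
            heating = False        # upper limit reached: switch the heating off
        elif temp <= lower:
            heating = True         # lower limit reached: switch the heating on
        # between the limits the previous on/off state is kept
        temp += leak * (outside - temp) + (heater_power if heating else 0.0)
        temps.append(temp)
        states.append(heating)
    return temps, states


if __name__ == "__main__":
    temps, states = thermostat(steps=200, lower=19.0, upper=21.0)
    settled = temps[20:]                       # skip the initial warm-up
    switches = sum(1 for a, b in zip(states, states[1:]) if a != b)
    print(f"settled range: {min(settled):.2f} to {max(settled):.2f} degrees")
    print(f"heater switched {switches} times in 200 steps")
```

After warming up, the room temperature in this model cycles through the band. It slightly overshoots each limit because the temperature changes by a finite amount per step.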

Biology and chemistry

Some biological systems exhibit negative feedback, such as the baroreflex in blood pressure regulation and erythropoiesis. Many biological processes (e.g., in human physiology) use negative feedback. Examples range from the regulation of body temperature to the regulation of blood glucose levels. The disruption of feedback loops can lead to undesirable results. In the case of blood glucose, if negative feedback fails, glucose levels in the blood may rise dramatically, as occurs in diabetes.

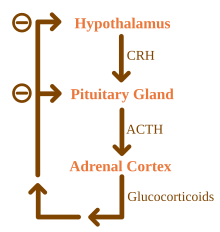

In hormone secretion regulated by negative feedback, gland X releases hormone X, which stimulates target cells to release hormone Y. When there is an excess of hormone Y, gland X "senses" this and inhibits its release of hormone X. As shown in the figure, most endocrine hormones are controlled by a physiologic negative feedback inhibition loop; an example is the glucocorticoids secreted by the adrenal cortex. The hypothalamus secretes corticotropin-releasing hormone (CRH), which directs the anterior pituitary gland to secrete adrenocorticotropic hormone (ACTH). In turn, ACTH directs the adrenal cortex to secrete glucocorticoids, such as cortisol. Glucocorticoids not only perform their respective functions throughout the body but also negatively affect the release of further stimulating secretions of both the hypothalamus and the pituitary gland. This effectively reduces the output of glucocorticoids once a sufficient amount has been released.[33]
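The glucocorticoid loop can be caricatured with a toy three-stage model, in which CRH drives ACTH, ACTH drives glucocorticoid, and glucocorticoid suppresses both upstream stages. It is not a physiological model. The variable names, the unit decay rates, and the form of inhibition (production divided by 1 + glucocorticoid level) are assumptions chosen only to show the structure of the loop. In this model, doubling the hypothalamic drive raises the settled glucocorticoid level by only about 1.5 times with feedback. Without the feedback it rises exactly 2 times.

```python
def settled_glucocorticoid(drive: float, feedback: bool,
                           t_end: float = 50.0, dt: float = 0.01) -> float:
    """Euler-integrate a toy CRH -> ACTH -> glucocorticoid cascade; return the final level.

    All rates and units are arbitrary (hypothetical); each species decays at rate 1.
    With feedback=True, the glucocorticoid level divides CRH and ACTH production
    by (1 + level), standing in for inhibition of hypothalamus and pituitary.
    """
    crh = acth = gluco = 0.0
    for _ in range(round(t_end / dt)):
        inhibition = 1.0 + gluco if feedback else 1.0
        d_crh = drive / inhibition - crh      # hypothalamus: CRH production, inhibited
        d_acth = crh / inhibition - acth      # pituitary: ACTH driven by CRH, inhibited
        d_gluco = acth - gluco                # adrenal cortex: glucocorticoid driven by ACTH
        crh += dt * d_crh
        acth += dt * d_acth
        gluco += dt * d_gluco
    return gluco


if __name__ == "__main__":
    for feedback in (True, False):
        g1 = settled_glucocorticoid(1.0, feedback)
        g2 = settled_glucocorticoid(2.0, feedback)
        print(f"feedback={feedback}: level {g1:.3f} -> {g2:.3f} when drive doubles "
              f"(x{g2 / g1:.2f})")
```

With feedback, the steady state of this model satisfies G(1 + G)² = drive, so the output grows much more slowly than the drive does.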

Self-organization

Self-organization is the capability of certain systems "of organizing their own behavior or structure".[34] There are many possible factors contributing to this capacity, and most often positive feedback is identified as a possible contributor. However, negative feedback also can play a role.[35]

Economics

In economics, automatic stabilisers are government programs that are intended to work as negative feedback to dampen fluctuations in real GDP.

Free-market economic theorists claim that the pricing mechanism operates to match supply and demand. However, Norbert Wiener wrote in 1948:

- "There is a belief current in many countries and elevated to the rank of an official article of faith in the United States that free competition is itself a homeostatic process... Unfortunately the evidence, such as it is, is against this simple-minded theory."[36]

References

1. W. Ross Ashby (1957). "Chapter 12: The error-controlled regulator". Introduction to Cybernetics. Chapman & Hall Ltd.; Internet (1999). pp. 219–243.

2. Robert E. Ricklefs, Gary Leon Miller (2000). "§6.1 Homeostasis depends upon negative feedback". Ecology. Macmillan. p. 92. ISBN 9780716728290.

3. Breedveld, Peter C. "Port-based modeling of mechatronic systems." Mathematics and Computers in Simulation 66.2 (2004): 99–128.

4. Tierie, Gerrit. Cornelis Drebbel. Amsterdam: HJ Paris, 1932. Retrieved 2013-05-03.

5. Hills, Richard L (1996). Power From the Wind. Cambridge University Press.

6. Maxwell, James Clerk (1868). "On Governors". Proceedings of the Royal Society of London. 16: 270–283.

7. David A. Mindell (2002). Between Human and Machine: Feedback, Control, and Computing before Cybernetics. Baltimore, MD, USA: Johns Hopkins University Press.

8. Friis, H.T., and A.G. Jensen. "High Frequency Amplifiers". Bell System Technical Journal 3 (April 1924): 181–205.

9. Black, H.S. (January 1934). "Stabilized Feedback Amplifiers". Bell System Tech. J. 13 (1). American Telephone & Telegraph: 1–18. Retrieved January 2, 2013.

10. Bennett, Stuart (1993). A History of Control Engineering: 1930–1955. IET. p. 70. ISBN 0863412998.

11. Rosenblueth, Arturo, Norbert Wiener, and Julian Bigelow. "Behavior, purpose and teleology." Philosophy of Science 10.1 (1943): 18–24.

12. Norbert Wiener. Cybernetics: Or Control and Communication in the Animal and the Machine. Cambridge, Massachusetts: The Technology Press; New York: John Wiley & Sons, Inc., 1948.

13. B. F. Skinner. "The Experimental Analysis of Behavior". American Scientist, Vol. 45, No. 4 (September 1957), pp. 343–371.

14. Arkalgud Ramaprasad (1983). "On The Definition of Feedback". Behavioral Science. 28 (1). doi:10.1002/bs.3830280103.

15. Herold, David M., and Martin M. Greller. "Research Notes. Feedback: The definition of a construct." Academy of Management Journal 20.1 (1977): 142–147.

16. Hermann A. Haus and Richard B. Adler. Circuit Theory of Linear Noisy Networks. MIT Press, 1959.

17. Peter M. Senge (1990). The Fifth Discipline: The Art and Practice of the Learning Organization. New York: Doubleday. p. 424. ISBN 0-385-26094-6.

18. Helen E. Allison, Richard J. Hobbs (2006). Science and Policy in Natural Resource Management: Understanding System Complexity. Cambridge University Press. p. 205. ISBN 9781139458603. "Balancing or negative feedback counteracts and opposes change."

19. Charles S. Carver, Michael F. Scheier. On the Self-Regulation of Behavior. Cambridge University Press, 2001.

20. John D. Sterman. Business Dynamics: Systems Thinking and Modeling for a Complex World. McGraw Hill/Irwin, 2000. ISBN 9780072389159.

21. Sudheer S Bhagade, Govind Das Nageshwar (2011). Process Dynamics and Control. PHI Learning Pvt. Ltd. pp. 6, 9. ISBN 9788120344051.

22. Charles H. Wilts (1960). Principles of Feedback Control. Addison-Wesley Pub. Co. p. 1. "In a simple feedback system a specific physical quantity is being controlled, and control is brought about by making an actual comparison of this quantity with its desired value and utilizing the difference to reduce the error observed. Such a system is self-correcting in the sense that any deviations from the desired performance are used to produce corrective action."

23. SK Singh (2010). Process Control: Concepts Dynamics And Applications. PHI Learning Pvt. Ltd. p. 222. ISBN 9788120336780.

24. For example, input and load disturbances. See William Y. Svrcek, Donald P. Mahoney, Brent R. Young (2013). A Real-Time Approach to Process Control (3rd ed.). John Wiley & Sons. p. 57. ISBN 9781118684733.

25. Charles D H Williams. "Types of feedback control". Feedback and temperature control. University of Exeter: Physics and astronomy. Retrieved 2014-06-08.

26. Wai-Kai Chen (2005). "Chapter 13: General feedback theory". Circuit Analysis and Feedback Amplifier Theory. CRC Press. p. 13-1. ISBN 9781420037272. "[In a practical amplifier] the forward path may not be strictly unilateral, the feedback path is usually bilateral, and the input and output coupling networks are often complicated."

27. Muhammad Rashid (2010). Microelectronic Circuits: Analysis & Design (2nd ed.). Cengage Learning. p. 642. ISBN 9780495667728.

28. Santiram Kal (2009). "§6.3.1 Gain stability". Basic Electronics: Devices, Circuits, and IT Fundamentals. PHI Learning Pvt. Ltd. pp. 193–194. ISBN 9788120319523.

29. James E Brittain (February 2011). "Electrical engineering hall of fame: Harold S Black". Proceedings IEEE. 99 (2): 351–353.

30. Santiram Kal (2009). "§6.3 Advantages of negative feedback amplifiers". Basic electronics: Devices, circuits and its fundamentals. PHI Learning Pvt. Ltd. pp. 193 ff. ISBN 9788120319523.

31. G. Schitter, A. Rankers (2014). "§6.3.4 Linear amplifiers with operational amplifiers". The Design of High Performance Mechatronics. IOS Press. p. 499. ISBN 9781614993681.

32. Walter G Jung (2005). "Noise gain (NG)". Op Amp Applications Handbook. Newnes. pp. 12 ff. ISBN 9780750678445.

33. Raven, PH; Johnson, GB. Biology, Fifth Edition. Boston: Hill Companies, Inc. 1999. p. 1058.

34. William R. Uttal (2014). Psychomythics: Sources of Artifacts and Misconceptions in Scientific Psychology. Psychology Press. pp. 95 ff. ISBN 9781135623722.

35. Scott Camazine, Jean-Louis Deneubourg, Nigel R Franks, James Sneyd, Guy Theraulaz, Eric Bonabeau (2003). "Chapter 2: How self-organization works". Self-organization in biological systems. Princeton University Press. pp. 15 ff. ISBN 9780691116242.

36. Norbert Wiener. Cybernetics: Or Control and Communication in the Animal and the Machine. p. 158.